TL;DR

If you absolutely have to manually update your glide.lock file to add a specific SHA1 for a dependency and can’t do it right with glide update, edit glide.lock as needed, then:

go get github.com/mattfarina/glide-hash

glide hash

glide hash prints the correct checksum for your glide.lock file; paste it into the hash: line at the top. You can now glide install without warnings.

The detailed explanation

Our microservices have a number of dependencies, one of which is logrus. Logrus is a great logging package, but was the trigger of a lot of issues last year when the repository was renamed from github.com/Sirupsen/logrus to github.com/sirupsen/logrus.

That one capitalization change caused havoc in the Go community. If you don’t understand why, let’s talk a little about dependency management in Go. (If you do, skip down to “The detailed fix”).

Go doesn’t have an official dependency management mechanism; when you build Go code, you pretty much expect to compile all the code it will need at once. Go does have a linker, but generally we really do just build a single static binary from source files, including the source of libraries too. The Go maintainers decided that it’s simpler to store one set of source code to be pulled in and compiled rather than store compiled libraries for multiple architectures and figure out which one needs to be pulled in. The Go compiler is pretty fast, and maintaining multiple native binary versions of libraries is hard.

Originally, all source management was done with go get, which would fetch code from a VCS endpoint and put it in the appropriate place in the GOPATH (essentially the location where “stuff related to but not part of this Go program” lives) so that it could be picked up during a compile. This is super simple, but fails in a number of ways:

- A set of go get commands is a separate step that has to be run before the program can be built.

- The result may not be reproducible: if someone makes a new commit to the library, the HEAD changes.

- Telling go get to fetch a specific version of a library is harder to do.

- go get is great at pulling a specific isolated library, but not good at managing transitive dependencies: e.g., we’ve installed library foo, but it needs library bar to perform some functions, and bar needs baz to do some of its work. We’d really like to see all of these figured out and installed at once, and to not have to remember what all the dependencies are, or to have a script to run to fetch them.

- We’re potentially running on multiple architectures, and we don’t want to have to maintain multiple executable scripts just to fetch our dependencies.
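To make the transitive problem concrete, here is a minimal sketch of what a dependency tool has to work out for the foo/bar/baz example. The deps map and the resolve function are hypothetical and only illustrate the idea; this is not how go get or glide is implemented.

```go
package main

import "fmt"

// deps is a hypothetical dependency graph: each library maps to the
// libraries it imports directly.
var deps = map[string][]string{
	"foo": {"bar"},
	"bar": {"baz"},
	"baz": nil,
}

// resolve returns root plus everything it depends on, transitively,
// ordered so that each library appears after its own dependencies.
func resolve(graph map[string][]string, root string) []string {
	seen := map[string]bool{}
	var order []string
	var visit func(name string)
	visit = func(name string) {
		if seen[name] {
			return // already handled; also guards against cycles
		}
		seen[name] = true
		for _, d := range graph[name] {
			visit(d)
		}
		order = append(order, name)
	}
	visit(root)
	return order
}

func main() {
	fmt.Println(resolve(deps, "foo")) // [baz bar foo]
}
```

A real tool also has to pick a version for each library that satisfies every constraint at once, which is where the resolution failures described later come from.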

Go’s first cut at solving this was the vendor directory. This directory lives in the same tree as the Go source and can be committed to the VCS, so one could get the required sources into the vendor directory, then commit the “known-good” version. This mostly solves the versioning problem, but it makes it easy for many slightly different versions of those libraries to end up spread across multiple source code repositories, where keeping them synced up for fixes is difficult. It also doesn’t address the transitive issues at all. To fix this, the Go community built unofficial source management tools to handle versioned access to the vendor directory plus automated detection and resolution of transitive dependencies.

The problem is that because the Go community is large, inventive, and active, we have a lot of them. We’ve already used two different tools: Godep and, currently, glide, and are probably going to switch to dep, which looks to eventually be the standard dependency management tool blessed by the Go core team. [Update: wrong again. go mod is the current official winner.]

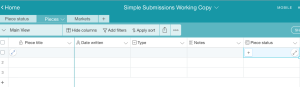

glide (our current tool, as noted) manages dependencies with two files: glide.yaml, which describes enough of the direct dependencies and their versions that all of the dependencies and their own transitive dependencies can be figured out. The glide.lock file stores the results of this dependency resolution as specific VCS commits (SHA1 hashes in the case of Git), allowing us to quickly fetch exactly what we want when getting ready to compile the code.

Like any other piece of software, the glide files have to be kept up to date, especially if there are dependencies on outside libraries (from GitHub and the like). This means periodically running glide update to refresh the dependencies in glide.lock that aren’t locked to a specific version (or range of versions) by glide.yaml. If one falls behind on this, or a change such as the Sirupsen/logrus to sirupsen/logrus one happens, or you simply need to upgrade something to a new version, these files can end up in a state where glide install still works but glide update doesn’t. glide install works because it simply downloads the revisions dictated by glide.lock without attempting dependency resolution again; glide update fails because glide.yaml didn’t limit the possibilities enough, so attempting to resolve the dependencies fails.

To fix this, we can do it one of two ways:

- The right way, which entails plodding through all the revisions until we’ve found a new set that works, fixed the glide.yaml file so that it defines that new set, and then used glide update to download them and rewrite glide.lock. This can be excruciatingly difficult, as it’s possible that the updated glide.yaml will no longer resolve, or will resolve the dependencies in ways that won’t actually build, and there will have to be many update/download/compile cycles to actually fix the issue.

- The wrong way, which is to muck around with glide.lock directly, adding or changing something without making sure that glide.yaml “compiles” to the updated glide.lock. This gets us back on track with code that builds and runs, but leaves us in the dangerous situation that glide update is now broken.

The detailed fix

If you naïvely go the wrong way and just make changes to the glide.lock file, glide tries to be a good citizen and warn you that you’ve done something you ought not to:

[WARN] Lock file may be out of date. Hash check of YAML failed. You may need to run 'update'

appears when you glide install.
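The wording of the warning suggests that glide compares a hash recorded in glide.lock against one it computes from glide.yaml. Here is a minimal sketch of that kind of check. It assumes a SHA-256 digest over the raw file bytes, which is an assumption for illustration only: glide may normalize the configuration before hashing, so these digests should not be expected to match what glide itself produces. The names hashOf and lockIsStale are hypothetical.

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

// hashOf returns the hex-encoded SHA-256 digest of the given bytes.
// Assumption: hashing the raw bytes stands in for whatever glide does.
func hashOf(yaml []byte) string {
	return fmt.Sprintf("%x", sha256.Sum256(yaml))
}

// lockIsStale reports whether the hash recorded in the lock file
// differs from the hash of the current configuration.
func lockIsStale(recordedHash string, yaml []byte) bool {
	return recordedHash != hashOf(yaml)
}

func main() {
	oldYAML := []byte("package: example\nimport: []\n")
	recorded := hashOf(oldYAML) // what the lock file remembered

	// Any edit to the configuration changes its digest.
	newYAML := append([]byte{}, oldYAML...)
	newYAML = append(newYAML, []byte("# edited\n")...)

	fmt.Println(lockIsStale(recorded, oldYAML)) // false
	fmt.Println(lockIsStale(recorded, newYAML)) // true
}
```

This is why hand-editing the files trips the warning: the recorded hash no longer matches the configuration it was computed from.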

As noted, the problem is that if you run glide update, you’ll break everything because you didn’t fix glide.yaml first. And maybe you just don’t have time to find the right incantation to get glide.yaml fixed just now.

So, you lie to glide, as follows.

- Add the dependency to glide.yaml.

- Edit glide.yaml and add the dependency plus its version if it has one. (Use master if you want to track HEAD or a specific SHA1 if you want to pin it to that commit.)

    - package: github.com/jszwec/csvutil
      version: 1.0.0

- Add the dependency to glide.lock.

- This one must be the SHA1; the easiest way to get this is to go to the repository where it lives and copy it down. I won’t go into detail here, but however it works in your VCS, you’ll need the full SHA1 or revision marker.

    - name: github.com/jszwec/csvutil
      version: a9cea83f97294039c58703c4fe1937e57ea5eefc

- If we stopped at this point, we’d get a warning from glide install that would recommend that we use glide update instead to install the required libraries. In our case, with a delicate web of dependencies between local libraries and Echo, openzipkin and Apache Thrift, and the two different versions of logrus, a glide update breaks one or more of these dependencies when we try it. To prevent someone else from spending way too much time trying to resolve the problem by juggling versions in the glide.yaml in the hope of creating a stable glide.lock, we need to fix the computed file hash at the top of the glide.lock file so that the warning is suppressed.

This is a hack! The best option is probably to import all the SHA1s into the glide.yaml file as versions, ensure glide update works, and then gradually relax the constraints until glide update fails again, then back up one step.

To calculate the hash, we can go get github.com/mattfarina/glide-hash, which installs a glide hash subcommand that does exactly that and prints the result on the console.

We install the subcommand plugin as noted, then cd to the codebase where we need to fix the glide.lock file. Once there, we simply issue glide hash, and the command prints the hash we need. Copy that, edit glide.lock, and replace the old hash on the first line with this new one.
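If you’d rather not hand-edit the file, the replacement step can be scripted. The following is a minimal sketch, assuming the hash: line is the very first line of glide.lock as described above. The replaceHash helper is hypothetical, the hash values are placeholders, and strings.Cut needs Go 1.18 or later.

```go
package main

import (
	"fmt"
	"strings"
)

// replaceHash returns the lock file contents with the leading "hash:"
// line rewritten to carry newHash. The second result is false, and the
// input is returned unchanged, if the first line is not a hash line.
func replaceHash(lock, newHash string) (string, bool) {
	first, rest, found := strings.Cut(lock, "\n")
	if !strings.HasPrefix(first, "hash:") {
		return lock, false
	}
	out := "hash: " + newHash
	if found {
		out += "\n" + rest
	}
	return out, true
}

func main() {
	// Placeholder contents; a real glide.lock has many more entries.
	lock := "hash: OLDHASH\nimports:\n- name: github.com/jszwec/csvutil\n"
	updated, ok := replaceHash(lock, "NEWHASH") // paste the glide hash output here
	fmt.Println(ok)
	fmt.Print(updated)
}
```

Refusing to touch a file whose first line isn’t a hash: line keeps the helper from silently mangling something that isn’t a glide.lock.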

Warning!

This is absolutely a stopgap solution. Sooner or later you’re going to need to update one or more of the libraries involved, and you really will want to do a glide update. Yes, you could keep updating this way, but it would be a lot better to solve the problem properly: go through all the dependencies, update the ones you need, and then make the necessary fixes so that your code and the library code are compatible again.